
More people are turning to AI assistants like ChatGPT, Gemini, and Perplexity instead of Google. These tools aim to deliver quick answers, often pulling information from external sources.

For editors, SEO specialists, and website owners, this shift means adapting to a new search landscape. Unlike traditional search engines, AI chatbots don’t rank sites — they generate responses using different algorithms.

This article explores how to optimize your website for AI assistants: how they source content, integrate it into answers, and what boosts your site’s chances of being referenced.

How AI Assistants Search for Information

In this article, when we refer to AI assistants, we mean services that accept queries in natural language and generate coherent responses themselves. These aren’t simple chatbots that work from scripts, nor are they search engines with traditional lists of links.

AI assistants include ChatGPT, Gemini, Perplexity, and Bing Chat — neural network-based interfaces that work on the principle of response generation rather than ranking.

We use this specific term because it most accurately reflects their essence: AI that helps find and process information, but does so differently than Google or other traditional search engines.

AI assistants don’t show a list of links — they immediately generate an answer. This is the key difference from traditional search engines, where users choose which website to visit.

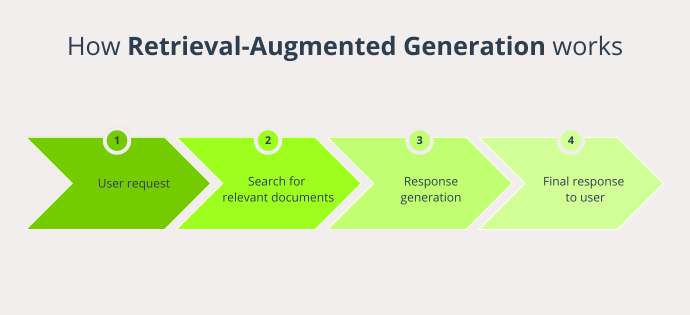

Most modern AI assistants are built on the Retrieval-Augmented Generation (RAG) approach. This works in two stages: first, the model finds relevant documents, then uses them as context for generating an answer. The final text is written by the model itself and may not contain a single direct quote.

This is convenient for users: they get a complete and coherent answer without clicking through links. But for website owners, this creates a new challenge: for content to catch the AI’s attention, it must first pass selection during the retrieval stage, then “appeal” to the model enough to be used in the response.
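A toy sketch of the two RAG stages in Python may make this selection step more concrete. It is not how ChatGPT, Gemini, or Perplexity actually work. The mini-corpus, the bag-of-words cosine score (production systems often use learned embeddings or a full search index instead), and the prompt template are all illustrative assumptions, and the generation call itself is left out. The sketch shows one thing: only passages that score well against the query ever reach the model.

```python
import math
import re
from collections import Counter

# Hypothetical mini-corpus standing in for an assistant's document store.
DOCUMENTS = {
    "vitamin-d": "Vitamin D deficiency symptoms include fatigue, bone pain and muscle weakness.",
    "robots": "The robots.txt file tells crawlers which paths they may not fetch.",
    "rag": "Retrieval-augmented generation passes retrieved documents to a model as context.",
}


def tokenize(text: str) -> list[str]:
    """Lowercase alphanumeric tokens; a stand-in for a real tokenizer."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query: str, doc: str) -> float:
    """Cosine similarity of bag-of-words counts (a toy relevance score)."""
    q, d = Counter(tokenize(query)), Counter(tokenize(doc))
    dot = sum(count * d[token] for token, count in q.items())
    norm = math.sqrt(sum(c * c for c in q.values())) * math.sqrt(sum(c * c for c in d.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 2) -> list[str]:
    """Stage 1 (retrieval): ids of the k best-scoring documents."""
    ranked = sorted(DOCUMENTS, key=lambda doc_id: score(query, DOCUMENTS[doc_id]), reverse=True)
    return ranked[:k]


def build_prompt(query: str, k: int = 2) -> str:
    """Input for stage 2 (generation): retrieved passages become the model's context."""
    context = "\n".join(f"[{doc_id}] {DOCUMENTS[doc_id]}" for doc_id in retrieve(query, k))
    return f"Answer using the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    print(build_prompt("What are the symptoms of vitamin D deficiency?", k=1))
```

With `k=1`, only the vitamin D passage is passed on to the model; the other documents never reach the generation stage, no matter how good they are.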

Search Engine vs. AI Assistant: How Results Are Generated

| | Traditional Search (Google, Bing) | AI Assistants (ChatGPT, Gemini, Perplexity) |
|---|---|---|
| Result | List of pages by relevance | Single answer generated by the model |
| Presentation | Headlines, snippets, URLs | Coherent first-person text |
| Information source | Real-time indexing | Database (static or updateable) + search within it |
| Selection mechanism | Algorithm-based ranking (PageRank, BERT, MUM, etc.) | Retrieval + generation (RAG, Synthesis) |
| Links | Always included | Sometimes (Perplexity, Bing), often not |
| SEO role | Keywords, page structure, backlinks | Structure, clear formulations, source reputation |
| User behavior impact | High: behavioral signals, CTR, time on site | Minimal or absent |
| Update frequency | Continuous | Model-dependent: from static database to real-time crawling |
| Visibility control | Relatively high (through optimization, webmaster tools) | Partially possible, but much less predictable |

Why AI Assistants Don’t Always Link to Websites

In traditional search results, links are the primary output. Users choose what to click on themselves. With AI assistants, the logic is different: links are optional attributes.

There are several reasons why links might be absent:

- ChatGPT without the Browse module enabled uses only information that was included in the model during training. These versions don’t have internet access and simply don’t know where they originally got the data.

- Even if an assistant has access to real sources, it’s not required to use direct quotes or links. The model’s job is to compose coherent and informative text, not format sources like in an academic paper.

- Some assistants intentionally remove links to avoid complicating the user experience. For example, Gemini often provides short answers without indicating where the information came from.

- In some cases, assistants use external knowledge bases (for instance, those licensed through partnerships) where the source isn’t publicly accessible at all.

Even if an AI assistant obtained information from open sources, it may not display these sources in the response, especially for simple queries.

For example, if you ask ChatGPT to describe the symptoms of vitamin D deficiency, it will simply list the main signs without indicating where the information came from.

When Links Do Appear

AI assistants handle queries differently.

The more complex or specific the question, the more likely the model will turn to external sources, especially if the query involves numbers, news, or comparative analysis.

For example, the question “What technologies do different search engines use for generating answers?” will likely force the assistant to consult articles or documentation. The same applies to comparisons, reviews, brand mentions, or software requirements — in such cases, assistants more often surface sources and may add links.

| Service | Link behavior | Reliability notes |
|---|---|---|
| ChatGPT (with Browse) | Sometimes embeds links, vague attribution | May cite unused sources |
| Perplexity | Always shows RAG-retrieved links | Same site may cover multiple points |
| Bing Chat | Numbered footnotes in text | Precise mode = most accurate |
| Gemini | Rarely shows links (except with SGE) | Prefers plain-text responses |

How AI Assistants Find Websites

AI assistants don’t scan the internet in real time with every query. They have their own databases that they rely on when generating responses. Some have static databases, others use a mix of trained memory and search mechanisms, and some fetch pages at the moment of the query.

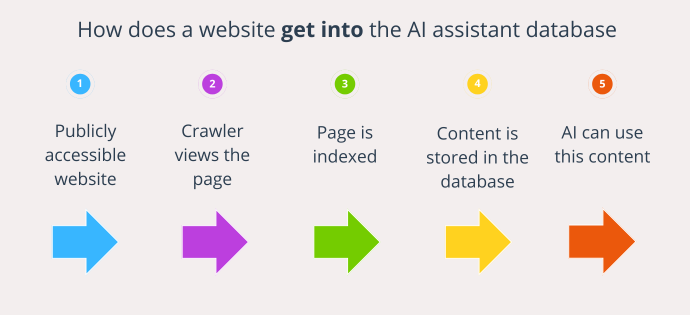

For a website to appear in a response, it must first get into these databases. Here, not only does technical openness matter, but also which sources a particular assistant works with.

An AI assistant’s knowledge base isn’t a copy of the entire internet. Each model has its own set of sources and approach to updating them. For example:

- ChatGPT (standard version) is trained on samples from Common Crawl, Wikipedia, technical documentation, and other publicly available data.

- Perplexity and Bing Chat use crawling: they can access websites at query time or regularly update their own indexing.

- Gemini combines both approaches: some information comes from the model, and some comes from Google’s search index.

It’s important to consider that assistants work differently with current internet data. For example, ChatGPT with Browse primarily relies on Bing’s index. Many generative search services (including Perplexity and Phind) also use Bing as one of their key web data sources. Therefore, even if your audience is more accustomed to working with Google, it’s worth paying attention to how your site appears to Bing specifically — this directly affects the likelihood of your content appearing in AI-generated responses.

But for an assistant to read a page, it must be technically accessible. This means:

- It’s not prohibited from crawling in robots.txt.

- It’s not marked as noindex.

- It doesn’t require authorization or block crawlers.

AI assistants aren’t Google’s bots: if they encounter a prohibition, they simply skip the page. Even large, authoritative sites won’t make it into the corpus if they are formally closed to crawling.
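To check the first two conditions for your own pages, Python’s standard library is enough. The sketch below uses `urllib.robotparser` to evaluate a sample robots.txt against several crawler user-agent tokens, and `html.parser` to look for a `noindex` robots meta tag. The robots.txt content, the URLs, and the helper names `crawl_report` and `has_noindex` are hypothetical. GPTBot (OpenAI), PerplexityBot (Perplexity), and bingbot (Microsoft) are publicly documented tokens, but check each vendor’s documentation for the current list. Nothing is fetched over the network, the `X-Robots-Tag` HTTP header (which can also carry `noindex`) is not checked, and `urllib.robotparser` uses simple prefix matching, so results for wildcard rules may differ from what a given crawler does.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: blocks OpenAI's GPTBot site-wide and hides /admin/ from all other bots.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /admin/
"""


def crawl_report(robots_txt: str, url: str, agents: list[str]) -> dict[str, bool]:
    """Map each user-agent token to whether robots.txt lets it fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse text directly; nothing is downloaded
    return {agent: parser.can_fetch(agent, url) for agent in agents}


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            self.directives.update(part.strip().lower() for part in content.split(","))


def has_noindex(html: str) -> bool:
    """True if a robots meta tag says noindex (the X-Robots-Tag header is not checked)."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return "noindex" in parser.directives


if __name__ == "__main__":
    print(crawl_report(ROBOTS_TXT, "https://example.com/blog/post",
                       ["GPTBot", "PerplexityBot", "bingbot"]))
    print(has_noindex('<meta name="robots" content="noindex, follow">'))
```

With the sample file, GPTBot is blocked everywhere, while PerplexityBot and bingbot may fetch `/blog/` pages but not anything under `/admin/`.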

Content Creation Guidelines for AI Assistants

AI assistants analyze content using the same key indicators as search engines: clear structure, logical flow, and scannable formatting. What works for SEO now also helps chatbots integrate your information effectively.

While no tools yet track how content appears in AI responses, patterns show assistants like ChatGPT synthesize common knowledge rather than copy single sources. Brief, well-structured passages with definitive answers perform best.

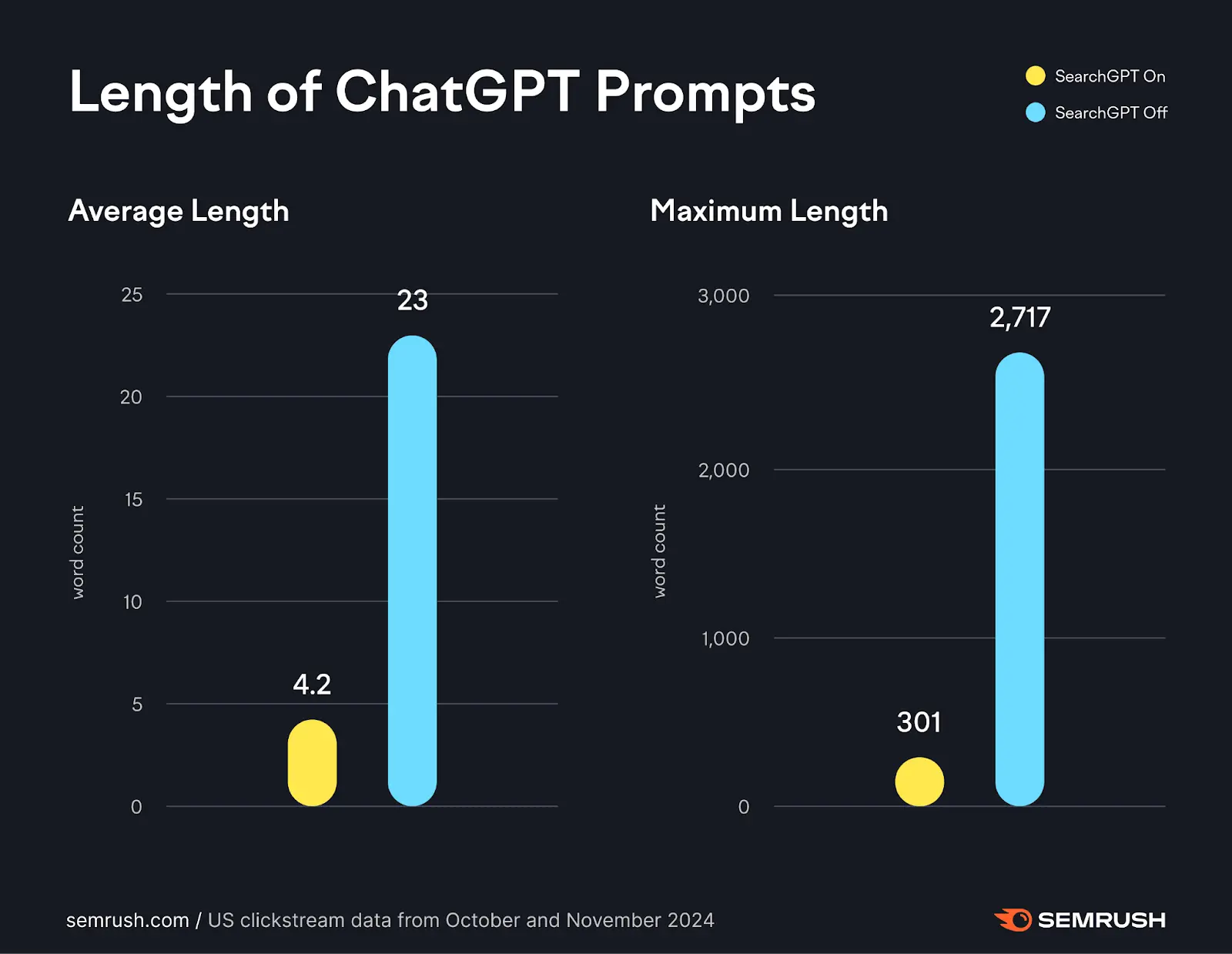

SEMrush data reveals AI queries are more conversational than traditional searches. Users seek complete explanations, not links. Your content must provide immediate solutions, not just pathways to them.

Queries also differ in character: they are practical rather than factual, “how to choose” rather than “how much does it cost.” Under these conditions, content works well when it includes:

- Different formulations of the same answer (including conversational ones).

- Rare and atypical questions that still have clear answers.

- Substance — examples, variations, details that help the model generate something useful.

Models don’t search for pages. They search for pieces of text that read like ready-made fragments of an answer. Anything that makes such a fragment clear and complete makes it more likely to be used.
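To illustrate the idea of text fragments, here is a Python sketch that splits an HTML page into heading-labeled passages. How real retrieval systems chunk pages is generally not published; splitting at `<h1>` to `<h4>` headings, the tag sets, and the names `PassageSplitter` and `split_passages` are assumptions for illustration only. It shows why clear headings and self-contained paragraphs help: each passage arrives with its own label, while a page without headings comes out as one large, unfocused block.

```python
from html.parser import HTMLParser

HEADING_TAGS = {"h1", "h2", "h3", "h4"}
BLOCK_TAGS = {"p", "li", "div", "br", "ul", "ol", "section", "article"}
SKIP_TAGS = {"script", "style"}


def _normalize(parts: list[str]) -> str:
    """Join raw text pieces and collapse runs of whitespace."""
    return " ".join("".join(parts).split())


class PassageSplitter(HTMLParser):
    """Groups visible text into (heading, passage) pairs, one per heading section."""

    def __init__(self) -> None:
        super().__init__()
        self.passages: list[tuple[str, str]] = []
        self._heading_parts: list[str] = []  # text of the current section heading
        self._body_parts: list[str] = []     # text collected since that heading
        self._in_heading = False
        self._skip_depth = 0                 # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self._skip_depth += 1
        elif tag in HEADING_TAGS:
            self._flush()                    # a new heading closes the previous passage
            self._heading_parts = []
            self._in_heading = True
        elif tag in BLOCK_TAGS:
            self._body_parts.append(" ")     # keep words from adjacent blocks apart

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS:
            self._skip_depth = max(0, self._skip_depth - 1)
        elif tag in HEADING_TAGS:
            self._in_heading = False
        elif tag in BLOCK_TAGS:
            self._body_parts.append(" ")

    def handle_data(self, data):
        if self._skip_depth:
            return
        (self._heading_parts if self._in_heading else self._body_parts).append(data)

    def _flush(self) -> None:
        body = _normalize(self._body_parts)
        if body:
            self.passages.append((_normalize(self._heading_parts), body))
        self._body_parts = []

    def close(self) -> None:
        super().close()  # processes any buffered trailing text
        self._flush()    # emit the final passage


def split_passages(html: str) -> list[tuple[str, str]]:
    """Split an HTML page into (heading, passage) pairs."""
    splitter = PassageSplitter()
    splitter.feed(html)
    splitter.close()
    return splitter.passages


if __name__ == "__main__":
    page = ("<h2>What is RAG?</h2><p>Retrieval comes first.</p><p>Generation follows.</p>"
            "<h2>Why structure matters</h2><p>Headings mark <em>boundaries</em>.</p>")
    for heading, passage in split_passages(page):
        print(f"{heading}: {passage}")
```

Feeding it a page with two `<h2>` sections returns two (heading, passage) pairs; the contents of `<script>` and `<style>` elements are skipped.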

Where You Can Actually Get Traffic from AI Right Now

While most AI assistants (including ChatGPT and Gemini) don’t guarantee website traffic, entry points have already emerged where neural networks actually drive traffic. This isn’t quite SEO in the traditional sense, but the logic is similar: content must be visible, understandable, useful, and located where generative engines can “pick it up.”

Here’s where you can currently get traffic from neural networks:

Google AI Overviews appear above organic results with generated answers. Links are possible but rare; priority goes to cited, clean sites with precise answers. The format is at an early stage but growing, especially in English.

ChatGPT with Browse can pull live data but often obscures sources. Links may appear as formatting rather than direct citations: answers are generated first, then loosely “anchored” to references.

Perplexity is designed for transparency: links are embedded directly in responses. It favors sites with high “presence density” (multiple mentions) and delivers direct traffic, bypassing traditional ranking filters.

Brave Search works similarly: it provides a brief summary above search results and shows links. The algorithms there are simpler, but the essence is the same: it’s important to be understandable, structured, and not appear to be the sole source of truth.

Classic assistants, such as ChatGPT without Browse or Gemini, aren’t currently oriented toward driving traffic. Even if a website is mentioned in a response, links aren’t always shown, and users most often just read the finished text. There are no estimates of traffic from such formats yet, but they may influence which formulations and brands remain in the information space.

Checklist: What to Consider When Working with AI-Generated Results

There’s no universal algorithm for “getting into AI results” yet. But there are clear practices that help increase your chances, whether in ChatGPT with Browse, AI Overviews, or hybrid formats.

Neural networks don’t search for pages — they seek answers. To succeed, your content must be noticeable, citable, and easy to parse. AI doesn’t rank pages traditionally but compiles responses based on meaning and tone. The winners are those who provide clear, concise, and ready-to-integrate content, not just top-ranked pages.

What helps:

Your site must be open to crawlers and AI search systems. This means proper robots.txt configuration, no noindex tags where they don’t belong, and a clear structure with sitemaps and schema.org markup. Without this, no generative engine will even see your content.

Even if a page’s structure seems “obvious” to you, it isn’t necessarily clear to models. Structure (headings, lists, paragraphs) helps algorithms correctly extract the fragments that make it into responses.

AI doesn’t retell entire articles — it pulls out ready, semantically complete blocks. Therefore, it’s important to provide explanations in text that can be inserted into generative responses.

Models form responses based on what sources generally write. The more your content is cited on external platforms, forums, and social media, the higher the chance that formulations from there will make it into responses.

AI queries are much more diverse than classic search queries. “Edge case” questions, complex scenarios, non-standard formulations — this is precisely where models often look for examples for generation. It’s worth adding such blocks to content.

Remember that ChatGPT, AI Overviews, and other AI search formats work differently. Optimizing “for everyone at once” doesn’t make sense. It’s better to understand the logic of each format: where citability matters, where structure matters, and where users simply read responses without clicking through.

Generative responses currently work poorly with local data. Traditional search is still stronger here. But it’s worth monitoring Google AI Overviews development — local content may start appearing there first.

Most importantly, don’t get fixated on “new AI optimization.” Models change quickly, and their behavior hasn’t stabilized yet. But clear, verifiable content with a solid structure still wins in any system.
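The first checklist point mentions schema.org markup. One common way to add it is a JSON-LD `<script type="application/ld+json">` element. The Python sketch below builds a schema.org `FAQPage`, a type that suits the question-and-answer blocks discussed above, from (question, answer) pairs. It is a sketch, not a guarantee that any particular assistant reads the markup; the function names `faq_jsonld` and `script_tag` and the sample content are hypothetical.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build a schema.org FAQPage object from (question, answer) pairs, as JSON-LD text."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, ensure_ascii=False, indent=2)


def script_tag(jsonld: str) -> str:
    """Wrap JSON-LD in the <script> element that is placed in the page's HTML."""
    # Escape "</" so answer text cannot close the <script> element early;
    # "<\/" is still valid JSON and decodes back to "</".
    safe = jsonld.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{safe}\n</script>'


if __name__ == "__main__":
    # Hypothetical sample content.
    print(script_tag(faq_jsonld([
        ("What is retrieval-augmented generation?",
         "A model first retrieves relevant documents, then uses them as context for its answer."),
    ])))
```

The printed element goes into the page’s HTML; a structured-data validator can then confirm that the markup parses as intended.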

Strategic Content Approach Changes

Additionally, consider how the nature of content strategy itself is changing. As noted in discussions about getting traffic in the neural network era, now that some traffic goes to AI-generated results, it’s worth shifting content lower in the funnel.

Where informational content used to help “boost” SEO, search and generative formats now more often surface materials that help users solve problems.

What this changes:

- Publishing as much content as possible is no longer effective. AI assistants can easily compile overviews themselves.

- Original content value increases — case studies, personal experience, specific solutions, data that doesn’t exist in other sources.

- Interactive elements work especially well: calculators, configurators, checklists — things that help users make choices or understand how to act.

- Don’t chase topics for coverage’s sake if you don’t have real expertise or utility in them. AI easily “breaks down” general noise but will take answers from those who demonstrate quality.

This is exactly why it’s important not just to “create content for neural networks” but to build a strategy around real user value. In this approach, both classic SEO and neural network results work toward a common goal.